Another week down, another recap to share! This week was all about preparing for the first round of Tradeoff Testing, which took place today. Both the research and design teams were busy making refinements to be ready for Thursday's pilot session and the real thing, which happened this morning!

We focused on making refinements to the test plan and the two opposing design concepts we planned to present and gather feedback on.

In this week’s recap we’re covering:

- What is Tradeoff Testing?

- Look and Feel Explorations

- Final Concepts for Testing

- Recap of Tradeoff Testing Session 1

In case you’re just tuning in, we are creating an AI product for politics from the ground up in 10 weeks. The product concept is a personalized control panel that provides a "safe space" for users to engage with AI while interacting with political content. This tool empowers users to identify, control, and understand AI-generated material, helping to combat bias and misinformation in the political landscape.

To get caught up on how we got here, read through the weekly recaps: Week 1, Week 2, Week 3

Let’s dive into what went down during Week 4 of Project Opus!

What is Tradeoff Testing?

Tradeoff Testing is a research approach where participants are shown two drastically different design concepts to spark reactions and gather information on their preferences and priorities. It's a helpful way to understand which features matter most to participants and why, especially when people hold conflicting viewpoints.

By showing users concepts on opposite ends of the spectrum, we get them thinking about what works and doesn’t. We’re intentionally presenting "out there" ideas and things we know won’t be part of the final solution to get raw, honest reactions.

We had a few key goals for the session:

- Capture users' reactions and preferences when presented with each of the design concepts to help prioritize levels of analysis, behavior disruption, guidance, granularity, and appropriate entry points for a tool like this.

- Gain deeper insights into any biases around AI, specifically in the political context.

- Understand how participants envision using a tool like ours and what would encourage them to adopt it.

The insights we gather from this testing help us identify which design direction to pursue, or whether successful elements from each concept can be combined into a blended design that meets the needs of all the differing viewpoints.

We'll go into more detail on the session breakdown later in the post, but in short: this week we conducted the first tradeoff testing session, a remote focus group with 4 participants.

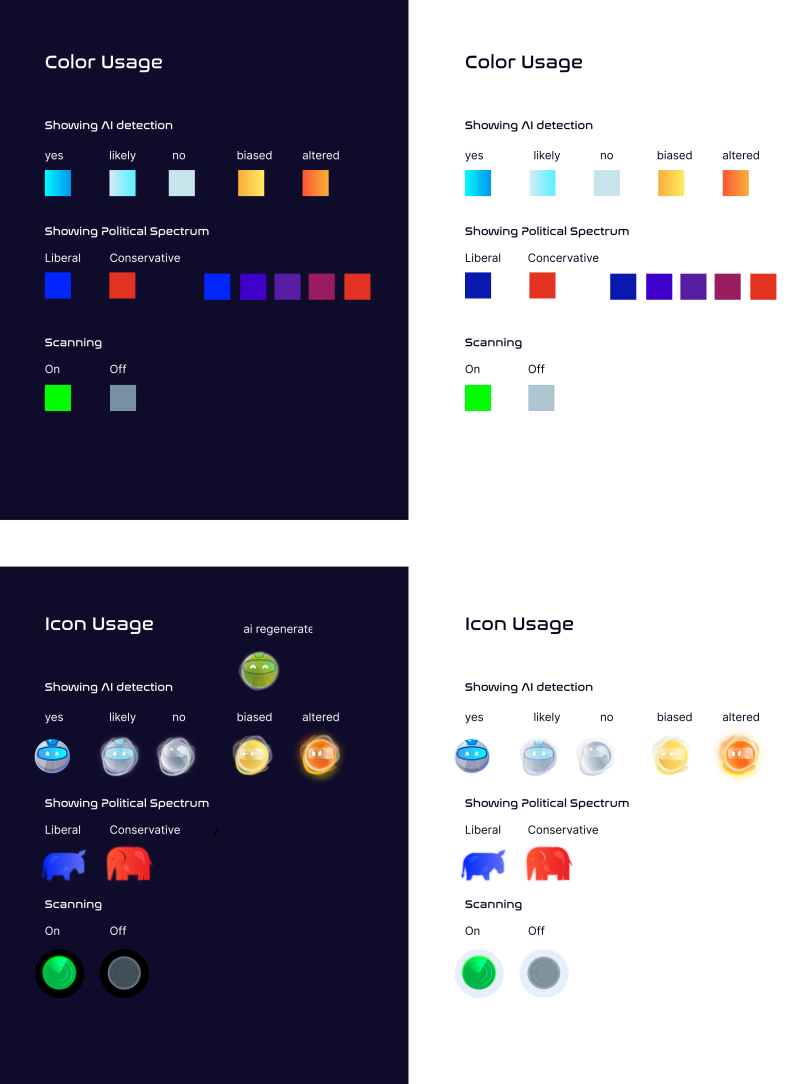

Look and Feel Explorations

The visual design evolved a lot this week! Cygny, our Art Director and Visual Designer, explored both light and dark modes for the look and feel, but ultimately the team decided to lean into a darker theme. We want the design to feel sleek, futuristic, and reliable without being overwhelming. Although we're moving forward with a dark theme, this AI tool is mobile-first, so we're making sure it adapts smoothly between light and dark modes to match users' device settings.

We also built out the design library, focusing on colors, icon usage, and other visual elements.

One of the design concepts we're exploring features a sticky element that stays with users as they browse online, and Cygny is thinking through what this icon (or character) might look like. This little guide would inform users of any AI-generated content they're viewing, subtly helping them stay aware without being intrusive. It needs to stay out of the way without feeling aloof.

The look and feel will continue to evolve, but with an initial direction locked down, we moved on to applying those styles to the two tradeoff testing concepts. We want to bring the designs to life so participants can see them and imagine themselves using them; applying visual design at this stage helps the concepts feel real.

Final Concepts for Testing

Let's look at what each concept and the features being tested look like with the visual design direction applied.

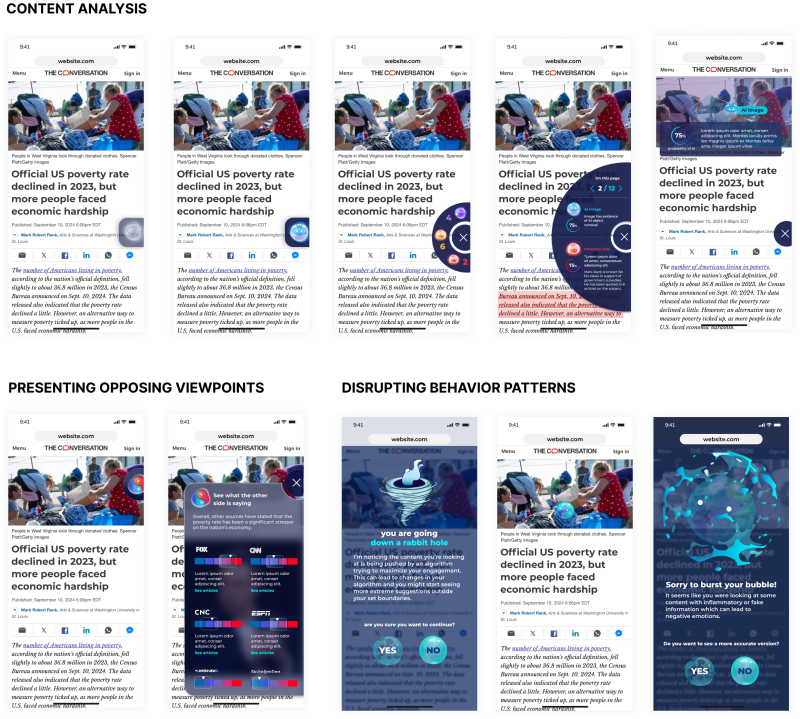

Concept 1: Passive

This is a plug-in solution aimed at users who value transparency but don’t want too much disruption. This approach passively monitors the content they browse, highlighting things like bias, misinformation, and AI-generated content. Features we’re testing include:

- Identifying AI-generated content

- Highlighting the sources behind news stories

- Summarizing what’s being said on the same topic from multiple sources

- Fact-checking content, flagging bias or misinformation, and generating a more balanced version

- Presenting what the opposing viewpoint is saying about a topic

Here's a peek at what they looked like.

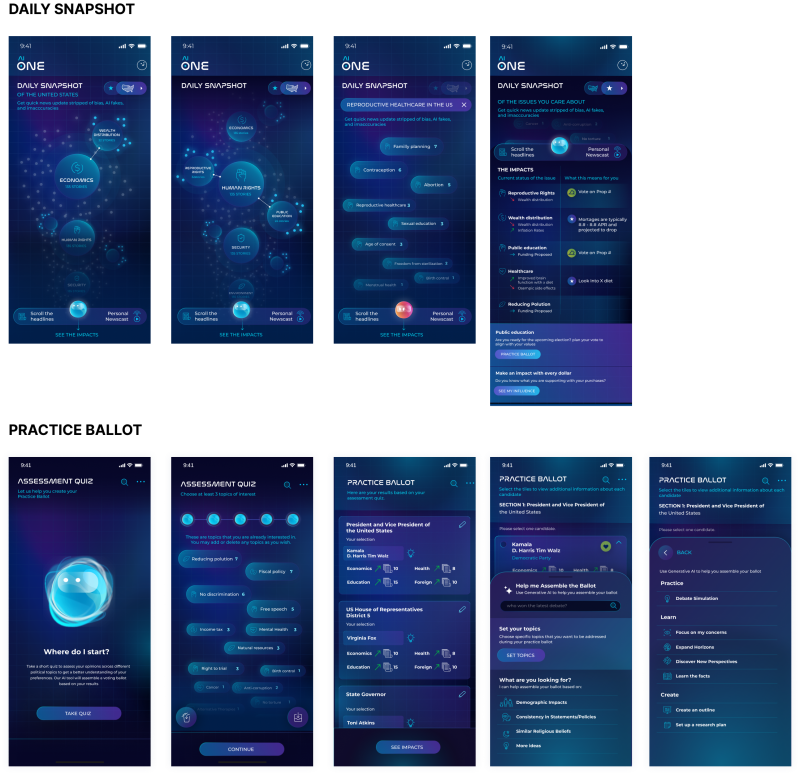

Concept 2: Guided

This concept is aimed at users who feel overwhelmed by political content and tend to avoid it. It's a web-based app designed as a supportive guide that helps users understand bias and potential misinformation and make decisions, especially around voting and understanding policy impacts. Some features we're testing include:

- Daily Snapshot: shows key political trends and summaries tailored to the user’s interests, giving them a well-rounded and quick understanding

- Headline Playlist: lets users add topics and news stories they’re interested in into a personal queue, where AI analyzes content in real-time and provides a personal newscast

- Practice Ballot: a guided quiz for users who are unsure where to begin with voting, walking them through the topics that matter most to them and showing the policy impacts of how they vote

Those looked a little something like this.

As you can see, these approaches and features are drastically different from one another, and that’s what we want for this initial round of testing.

Both concepts offer ways to analyze content, dive deeper into topics, and have guidance in their own unique way. By presenting such distinct approaches, research participants will be pushed to decide and speak to their preferences, priorities, and the why behind them.

To see how we arrived at pursuing the Passive and Guided design approaches, check out last week’s recap.

Recap of Tradeoff Testing Session 1

It's Friday, and the first tradeoff testing session took place remotely this morning, with 4 participants joining the 60-minute focus group. The session was designed to gather insights on feelings toward AI-generated content and habits around political information consumption, and of course to gather feedback on the opposing design concepts.

The session was broken up into 4 sections:

- Intro & Icebreaker: Establish a relaxed, open, and comfortable conversation space.

- Exploring Biases & Perceptions: Understand participants' experiences with political content and AI.

- Reaction to Concepts: The heart of the session, focused on participants' feedback on the two concepts.

- Free Discussion: An open-ended conversation around the potential of an AI-powered tool like this for navigating political content.

Overall, participants’ reactions to Concept 1 were positive, and they found value in the tool’s ability to monitor content and provide additional context on AI-generated or altered material. However, some participants were concerned about data privacy and how the system might track their online behavior. The use of color in the concepts was also a point of confusion for participants, leaving them questioning the difference between status colors and colors meant to represent political affiliation.

Unfortunately, the hour was up before we were able to show any of Concept 2, so while we gathered some insights, the session missed the mark as a true tradeoff test. Where does that leave us? We have 3 business days to conduct another tradeoff testing focus group, gather the insights we need to make our next design decisions, and stay on the project timeline.

The team is already recruiting for another session on Wednesday, which will run 90 minutes instead of 60. We are also adjusting the test plan by cutting the icebreaker and shortening the bias discussion so we can spend more time on both concepts.

- Challenge: The big challenge is finding the right balance in these focus groups. You want to let people share their thoughts freely, especially when the conversation is flowing, but at the same time, we only have a limited amount of time and a lot to cover. It’s a tough one, especially when people have a lot to say about the political subject matter.

- Whoops: Time was the biggest "whoops" of the week. We spent too much time at the beginning on the icebreaker and initial questions, which left us rushing to get through the actual concepts. But hey, lesson learned! Next time we're cutting down the intros and keeping the focus on the concepts.

- Win: On the bright side, even though we only presented one concept, the participants were really engaged. Their feedback showed excitement about a tool like this and its potential value, especially for preventing bias and misinformation. It's encouraging to see that we aren't the only ones who think a tool like this would be helpful!

Next week, we’ll be running another round of tradeoff testing to get the feedback we need. To continue making progress on design while we wait for all the insights, the design team will be diving into interaction states and exploring preference setting pages. Once we complete the second session, we’ll share all the insights and what they mean for the next phase of design. Stay tuned!

Need a little refresher on how we got here? Check out the weekly writeups below and follow us on LinkedIn to view video summaries.

- Week 1 Writeup & Recap Video

- Week 2 Writeup & Recap Video

- Week 3 Writeup & Recap Video