Welcome to the Week 7 recap of Project Opus! We have one more round of testing (Yes, you read that right… More testing.) to complete before we head into the final design stretch of the project. Our focus this week was split between updating designs based on previous focus group feedback, finalizing a storyline for this week’s user research, research prep, and recruitment. Oh, and we also conducted 7 in-depth interviews (IDIs).

The week went by in the blink of an eye and it was all hands on deck. Here’s what we’re covering in this week’s recap:

- In-Depth Interview (IDI) Prep

- Design Updates for Testing

- IDI Sessions and High-Level Findings

Let’s dive in!

The beginning of the week was spent finalizing the test plan and recruiting participants for Thursday and Friday’s interview sessions. We were looking to run 7 remote user interviews, each 90 minutes long, and sought to recruit a range of participants, from politically disengaged individuals to those who actively keep up with political news. Their engagement with political content varied widely, with some reporting that they form opinions ahead of elections and others interested in consuming political content simply to stay informed.

Our main goals for this final round of user research were to apply the design changes from previous rounds and allow users to explore and interact with a working prototype. We also wanted to uncover:

- How was the AI summary information received by users, and was the right level of detail shown?

- Does the navigation flow feel like a consistent, seamless experience for users when transitioning between the app and the integrated plugin feature?

- Is the AI chatbot experience intuitive, or does it need improvement?

- Would this tool help users gain a more accurate understanding of politics and political news?

- And of course, collect data on users’ understanding of the 4 main features of the tool (Daily Snapshot, Personal Newscast, Content Analysis, and Practice Ballot) to ensure an intuitive, valuable experience.

To gather all these insights within the 90-minute sessions, the test plan was broken up by feature, with subtasks and data collection goals for each screen in the prototype. While our researcher, Alice, got to work finalizing that test plan and recruiting seven all-new participants, the design team was busy getting the product screens ready.

Design had 3 main goals at the beginning of the week in preparation for testing.

- Update the product’s key features with design implications gathered from the previous focus groups.

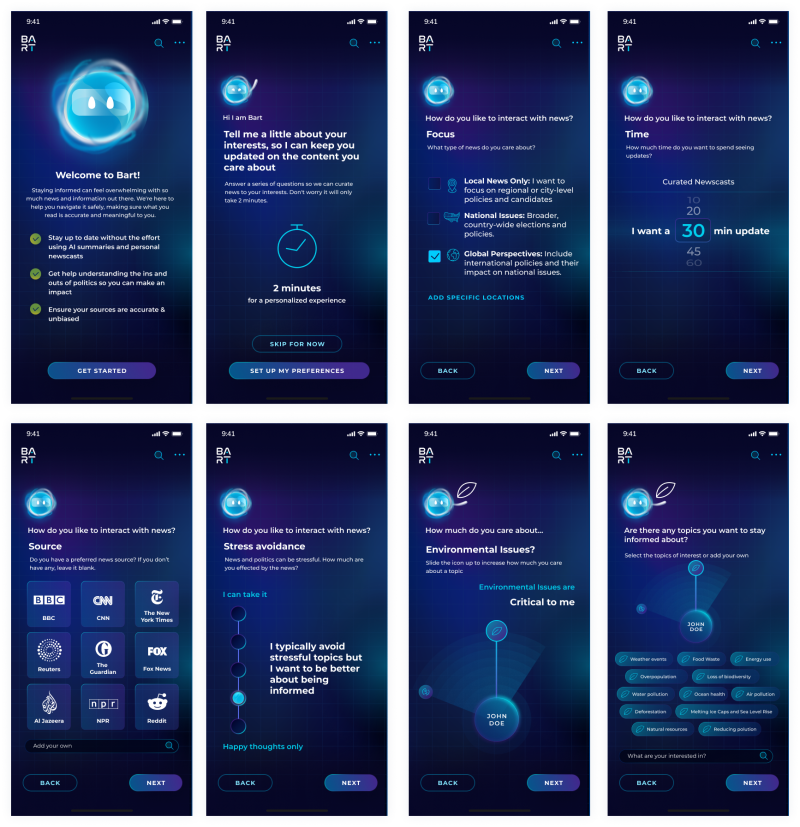

- Flesh out what the story for testing needs to be to gather the data needed for final design changes, and add more color to the tool’s onboarding process to help users understand how the app can be used to their benefit.

- Prototype the updated design screens in Figma to give participants a clickable prototype to interact with during testing.

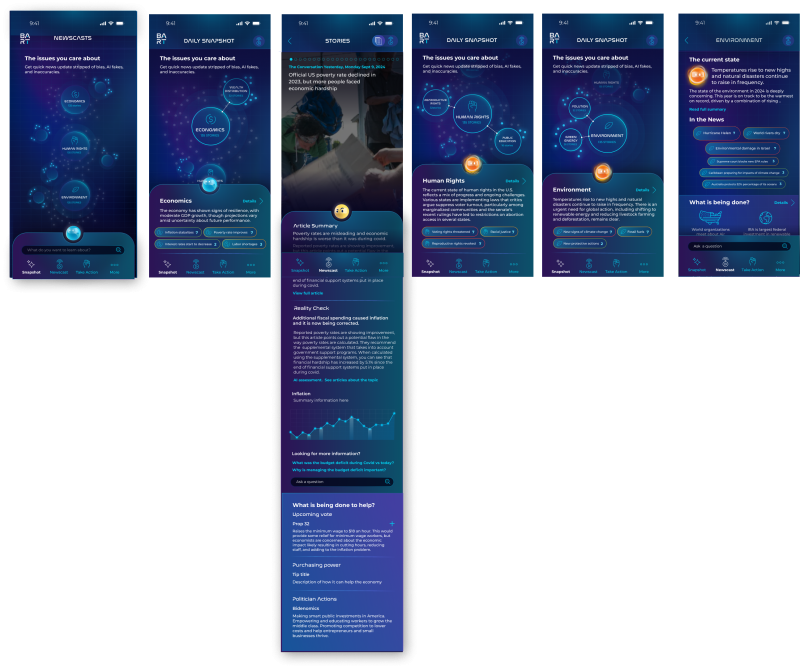

From the insights uncovered in the previous tradeoff testing focus groups, we updated the designs and applied the look and feel to new screens to be able to create a prototype for testing. We did run into a few hiccups with prototyping in Figma.

Some of the design screens with the adapted look and feel were created in Illustrator and brought over to Figma, which posed a challenge when prototyping certain components. Because testing was only a few days away, we needed to fall back on higher-fidelity wireframes in some cases.

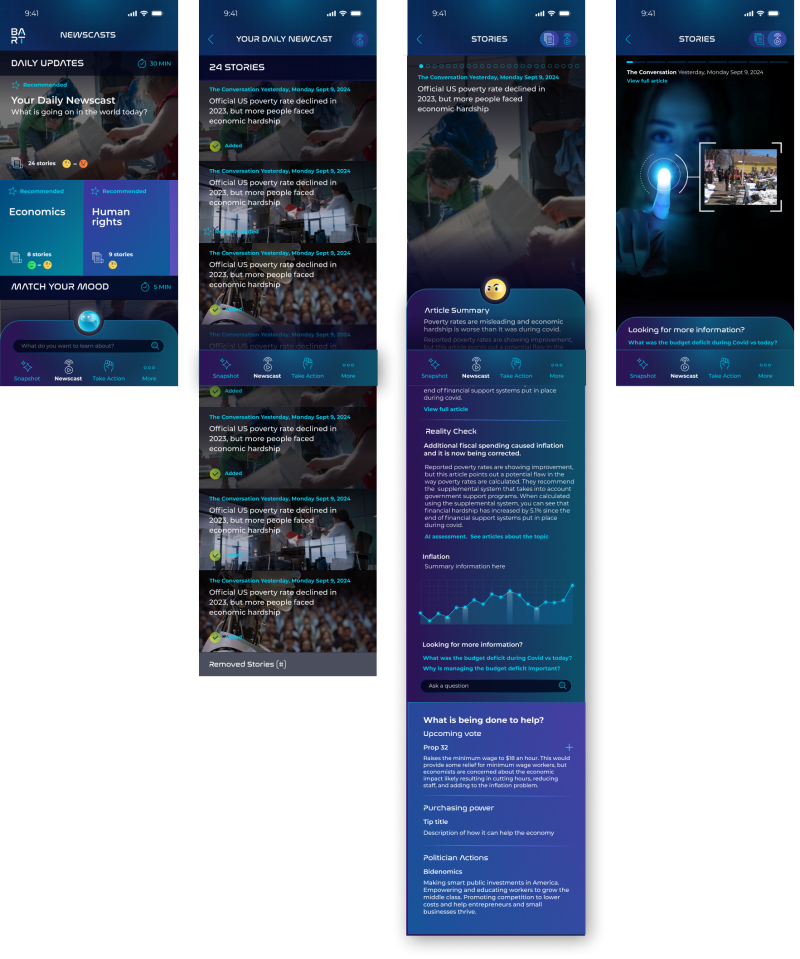

It was all hands on deck leading up to the 7 remote IDI sessions on Thursday and Friday. Throughout the sessions, participants interacted with the tool’s onboarding flow, which gathers political preferences and interests, as well as the 4 core features: Daily Snapshot, Personal Newscast, Content Analysis, and Practice Ballot. For each feature and subtask, our team observed how participants navigated through the tool and engaged with AI features like article summaries and AI detection, and assessed how well the design fostered trust.

Research Goals for Each Feature:

1. Daily Snapshot

- This feature surfaces activity levels and news on the topics of interest people specify to get a quick pulse of what’s going on locally, nationally, or globally. It provides news summaries with an option to dive deeper. For testing, we wanted to know if the AI summaries offered the right level of detail, if the data visualization was understandable, and whether the AI-generated information garnered trust.

- Key questions: Did users feel informed without being overwhelmed? Were the summaries quickly digestible yet actionable, allowing for deeper dives into information if they wanted more context?

2. Personal Newscast

- This feature lets people get a tailored newscast based on their interests and specify how much time they want the newscast to take. For example, they could get a quick 15-minute update on all the topics they care about, or longer deep dives when they have more time. We wanted to know if the new design struck the right balance between informative and engaging.

- Key questions: Did the tailored newscast help users feel more informed quickly? Was the navigation intuitive? How do they see themselves using this feature in their daily routine?

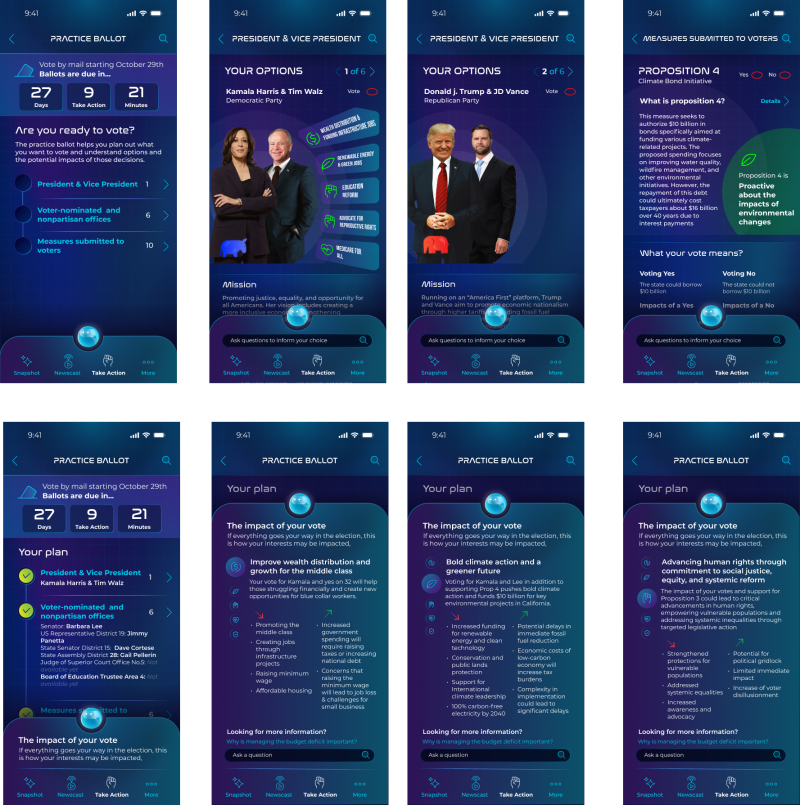

3. Practice Ballot

- This feature brings a layer of action to the tool, allowing users to better understand policy, delegates running for office, and political stances, all with the goal of informing people neutrally and giving them the information needed to form their own informed opinions. We wanted to understand if the design of this feature helps make things approachable and actionable for users and if information is presented correctly based on delegate/policy information vs. user topics of interest.

- Key questions: Did the ballot make the voting process more approachable? Did users feel prepared or empowered to vote confidently, and was the flexibility (jumping between sections) helpful or confusing?

4. Content Analysis

- This feature offers AI-generated summaries of articles; on-screen annotation of AI-generated content, bias, and misinformation around political topics; and moments of behavior disruption to notify users when they are going down a “rabbit hole” of content. We wanted to learn how much control users wanted over the content they engage with and whether they found the AI-generated summaries helpful.

- Key questions: Did AI summaries offer the right amount of information? How much control over content engagement did they want? Did they perceive the AI as overstepping its role by curating too much?

The design team also built out the onboarding sequence and added more color to the process. The aim was to help users understand, during testing, how the app can be used to their benefit and to get them on board with selecting topics and their level of interest within politics.

The research team is off synthesizing the findings and writing a research report, which they will share in a report readout with the whole team next week, but some clear findings have already emerged. The design team sat in on the live sessions and is already reacting to feedback collected.

High-Level Takeaways

- Rethink Emotional Indicators: Users found mood-based emojis unnecessary and were uncomfortable with the system attempting to predict or influence their emotional response to news content. Instead, the tool should allow users greater control over how they engage with information and foster self-regulation over time.

- Clarify Emotional Impact: Clearly define how stress and negative emotions are measured. Emotional responses vary depending on individuals’ lived experiences; a one-size-fits-all emotional framework risks misalignment with personal responses and nuanced events.

- Emphasize Trusted Sources: Participants are comfortable with AI in general, but political content presents unique challenges around bias, misinformation, and polarized perspectives. AI-generated summaries can simplify complex topics, but their effectiveness hinges on user trust. To build that trust, it’s essential to clearly disclose the sources behind these summaries and explain the reasoning and criteria driving recommendations.

- Consolidate Features: The Newscast and Snapshot features share overlapping goals and should be consolidated, balancing detailed content summaries when needed with quick, direct access to information in other moments.

- Visual Design: Participants want a simplified interface. They felt the tool was overdesigned in moments, which left some data visualizations unclear. Typography needs to be revisited for legibility and a less “outer space” feel.

“I would like to be able to choose the content I am interested in following—curated content based on my expressed interest, not on search history.” -Participant #7

“It’s very important for me to be not biased and to have an open mind, and one of the ways [to do that] is to be able to see the multi-facet of issues.” -Participant #3

- Challenge: Managing screen space on a mobile app is always a challenge. There is a lot of material and value we are trying to squeeze into the tool, but only so much screen real estate to work with. Our focus is to keep prioritizing information that keeps people in the know on the topics they care about before diving into granular details.

- Win: Our 3rd and final round of user testing was completed for this project! We believe in testing early and often to ensure our direction and changes align with what users want and will find valuable. We’re excited to synthesize this last round of findings and apply the final design changes!

- Whoops: Some of our design screens that have the visual look & feel applied were created in Illustrator and then imported into Figma. When it came time to prototype in Figma, we ran into technical hurdles trying to prototype a handful of components together. The team turned it around and got a working prototype together in time for testing! When it comes time to do final design renders, we’ll be creating everything in Figma.

Since this was the final round of testing, we wanted to hear what the team was surprised by as they sat in on the IDIs. Here’s what they had to say!

“These sessions have shown me that most people are not ‘afraid’ of AI, they just want to make sure that if they are using it, it is specific enough to address their needs. Having a simplified summary with top highlights in articles isn't enough to drive a need for something like our tool, so I'm excited to explore how we can make it even more resourceful.”

– Celeste Alcon, UX Designer

“I wasn't expecting the newscast feature to be so popular, but it makes sense: for a lot of participants, the sheer amount of news sources out there requires so much work to go between, and you must be diligent if you want to get well-rounded information. So having a tool that can pull all of that and take that burden off was valuable to people.”

– Sarah Field, Product Manager

That’s right! During testing and throughout the design, we’ve been calling the tool and the personified character that’s with users at each step, Bart. Why? Well, if we’re being honest, we just needed something general to use in testing, but the name never felt right and didn’t resonate with participants.

We’re excited to share we’ve given our AI tool and helper a new name—Poli! Your tool for unbiased political engagement, powered by AI-driven transparency.

With only a few weeks left in the project, the finish line is in sight. Next steps involve synthesizing the IDI research findings, presenting them to the team, and entering the final design stage. We’ll be finalizing the visual design and all screens based on research findings, and then moving on to rendering the final design.

We’re nearing the end, but we have a lot of design work still ahead to bring this project to a close. If you’ve been following since Week 1, we hope you’re excited by this project’s evolution, and we can’t wait to share the final product.

If you’re just tuning in, get caught up on weeks past through the writeups below and follow us on LinkedIn to view video summaries!

- Week 1 Writeup & Recap Video

- Week 2 Writeup & Recap Video

- Week 3 Writeup & Recap Video

- Week 4 Writeup & Recap Video

- Week 5 Writeup & Recap Video

- Week 6 Writeup & Recap Video